Author: prateek, roshan, siddhartha & linguine (Marlin), krane (Asula) Compiler: Shew, GodRealmX

Trusted Execution Environments (TEEs) have become increasingly popular since Apple announced its private cloud and NVIDIA provided confidential computing in its GPUs. Their confidentiality guarantees help protect user data (which may include private keys), while isolation ensures that the execution of programs deployed on them cannot be tampered with—whether by humans, other programs, or the operating system. Therefore, it is not surprising that the Crypto x AI field uses TEEs extensively to build products.

Like any new technology, TEEs are going through a period of optimistic experimentation. This article hopes to provide developers and general readers with a basic conceptual guide to what TEEs are, their security model, common vulnerabilities, and best practices for using TEEs securely. (Note: To make the text easy to understand, we have consciously replaced TEE terms with simpler equivalents).

What is a TEE

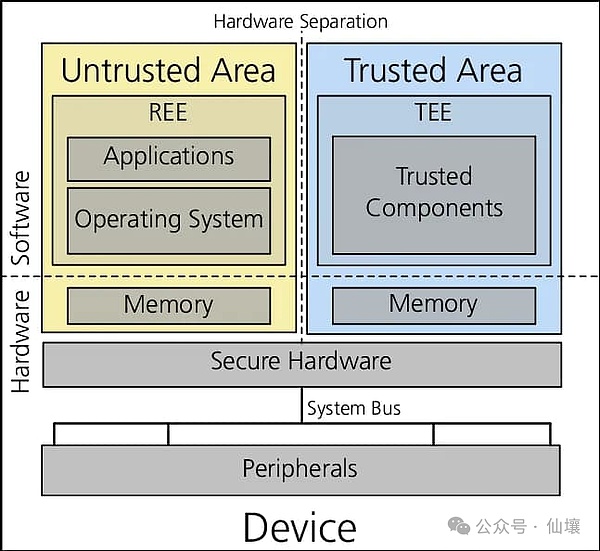

A TEE is an isolated environment in a processor or data center where programs can run without interference from the rest of the system. Achieving this isolation requires a number of design measures, chiefly strict access control that governs how the rest of the system can reach the programs and data inside the TEE. TEEs are now ubiquitous in mobile phones, servers, PCs, and cloud environments, which makes them easy to access and affordable.

This may sound vague and abstract. In practice, different server and cloud vendors implement TEEs in different ways, but the fundamental purpose is always to keep other programs from interfering with the TEE.

Most readers probably log in to their devices with biometric information, for example by unlocking a phone with a fingerprint. But how can we ensure that malicious applications, websites, or jailbroken operating systems cannot access and steal this biometric information? In addition to encrypting the data, the circuits in a TEE-enabled device do not allow any program to access the memory and processor regions occupied by sensitive data.

Hardware wallets are another example of TEE application scenarios. The hardware wallet is connected to the computer and communicates with it in a sandbox, but the computer cannot directly access the mnemonics stored in the hardware wallet. In both cases, users trust that the device manufacturer can correctly design the chip and provide appropriate firmware updates to prevent the confidential data in the TEE from being exported or viewed.

Security Model

Unfortunately, there are many types of TEE implementations, and each of them (Intel SGX, Intel TDX, AMD SEV, AWS Nitro Enclaves, ARM TrustZone) requires its own security modeling and analysis. In the rest of this article, we will mainly discuss Intel SGX, Intel TDX, and AWS Nitro, because these TEE systems have more users and more complete development tooling. They are also the most commonly used TEE systems in Web3.

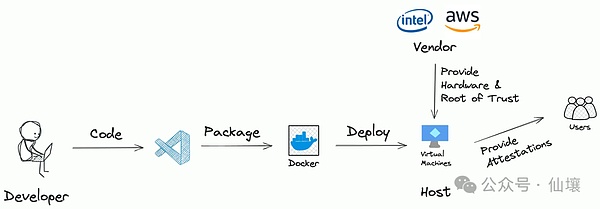

Generally speaking, the workflow of an application deployed in TEE is as follows:

The "developer" writes some code, which may or may not be open source

Then, the developer packages the code into an Enclave Image File (EIF), which can be run in the TEE

The EIF is hosted on a server with a TEE system. In some cases, developers can directly use a personal computer with a TEE to host the EIF to provide services to the outside world

Users can interact with the application through a predefined interface.

Obviously, there are three potential risks here:

Developer: What does the code used to build the EIF actually do? The EIF code may not match the business logic advertised by the project team and may steal users' private data

Server: Does the TEE server run the EIF as expected? Is the EIF really executed inside the TEE? The server may also run other programs in the TEE

Vendor: Is the design of the TEE secure? Is there a backdoor that leaks all the data in the TEE to the vendor?

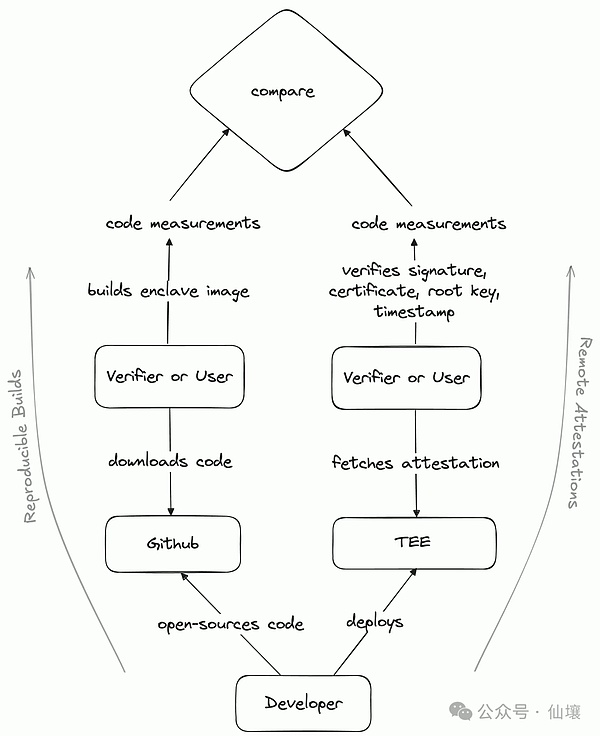

Fortunately, current TEEs already have ways to address the above risks, namely Reproducible Builds and Remote Attestations.

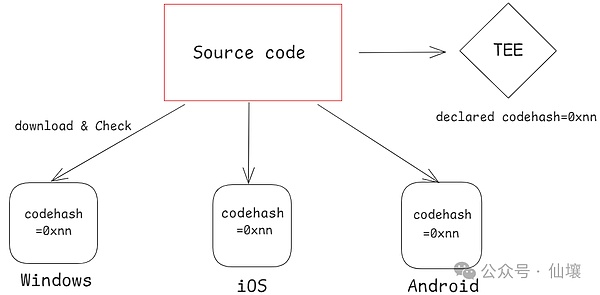

So what is a reproducible build? Modern software development often imports a large number of dependencies, such as external tools, libraries, or frameworks, and these dependencies may themselves carry hidden risks. Tools such as npm therefore record a hash of each dependency as its unique identifier. When npm finds that a dependency no longer matches the recorded hash, it treats the dependency as having been modified.
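The hash check described above can be sketched in a few lines. This is a minimal illustration, assuming an SRI-style `sha512-<base64>` string like the `integrity` field in npm lockfiles; the function names and the sample dependency contents are hypothetical.

```python
import base64
import hashlib


def sri_digest(data: bytes, algorithm: str = "sha512") -> str:
    """Return an SRI-style string such as 'sha512-<base64 digest>'."""
    digest = hashlib.new(algorithm, data).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def matches_recorded_hash(data: bytes, recorded: str) -> bool:
    """Check downloaded bytes against the hash recorded at lock time."""
    algorithm, _, _ = recorded.partition("-")
    return sri_digest(data, algorithm) == recorded


# Hypothetical dependency contents; a real check would read the package tarball.
original = b"module.exports = () => 42;\n"
recorded = sri_digest(original)  # what a lockfile would store
tampered = original + b"// injected\n"

print(matches_recorded_hash(original, recorded))  # unchanged dependency
print(matches_recorded_hash(tampered, recorded))  # modified dependency
```

Any change to the dependency, however small, produces a different digest, so the comparison fails for the tampered bytes.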

Reproducible builds can be thought of as a set of standards whose goal is that any code, built on any device according to a pre-defined process, ends up with the same hash value. In practice, identifiers other than plain hashes can also be used; we call all of them code measurements here.
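To make the idea of a code measurement concrete, here is a minimal sketch that hashes a build output deterministically. It assumes a build is just a mapping from relative paths to file contents; real TEE platforms define their own measurement formats, and `code_measurement` is a hypothetical helper, not any platform's API.

```python
import hashlib


def code_measurement(files: dict[str, bytes]) -> str:
    """Hash a build output deterministically.

    `files` maps relative paths to file contents. Paths are sorted so the
    result does not depend on insertion or filesystem order.
    """
    h = hashlib.sha256()
    for path in sorted(files):
        content = files[path]
        # Length-prefix each field so ("ab", "c") and ("a", "bc") differ.
        for field in (path.encode("utf-8"), content):
            h.update(len(field).to_bytes(8, "big"))
            h.update(field)
    return h.hexdigest()


# Two builds of the same source on different machines, listed in different order.
build_on_machine_a = {"bin/app": b"\x7fELF...", "etc/config": b"mode=prod\n"}
build_on_machine_b = {"etc/config": b"mode=prod\n", "bin/app": b"\x7fELF..."}
# A build where one file was changed.
modified_build = {"bin/app": b"\x7fELF...", "etc/config": b"mode=debug\n"}

print(code_measurement(build_on_machine_a) == code_measurement(build_on_machine_b))
print(code_measurement(build_on_machine_a) == code_measurement(modified_build))
```

The measurement is only useful if the build itself is reproducible: if two honest builds of the same source produce different bytes, their measurements differ and nothing can be verified.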

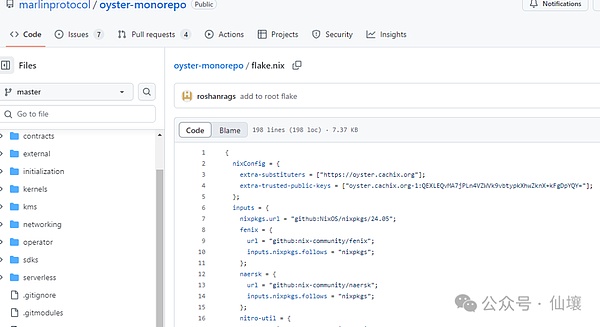

Nix is a common tool for reproducible builds. When the source code of a program is public, anyone can inspect it to make sure the developer has not inserted anything abnormal. Anyone can then build the code with Nix and check whether the resulting artifact has the same code measurement/hash as the one the project team deployed in production. But how do we know the code measurement of the program running in the TEE? This is where a concept called "remote attestation" comes in.

Remote attestation is a signed message from the TEE platform (the trusted party) that contains the program's code measurement, the TEE platform version, and so on. It lets external observers know that a program is being executed in a secure location (a genuine TEE of a specific platform version) that nobody else can access.

Together, reproducible builds and remote attestation let any user learn which code is actually running in the TEE and on which TEE platform version, which prevents developers or servers from acting maliciously.
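The verification logic on the user's side can be sketched as follows. This is a simplified illustration, not any vendor's attestation format: an HMAC with a shared key stands in for the platform's signature (real platforms sign with asymmetric keys and a certificate chain), and the field names, `VENDOR_KEY`, and both functions are assumptions made for the example.

```python
import hashlib
import hmac
import json

# ASSUMPTION: a shared HMAC key stands in for the vendor's signing key.
# Real platforms use asymmetric signatures backed by a certificate chain.
VENDOR_KEY = b"hypothetical-vendor-key"


def sign_attestation(measurement: str, platform_version: int) -> dict:
    """Simulate what the TEE platform would emit (illustration only)."""
    payload = json.dumps(
        {"measurement": measurement, "platform_version": platform_version},
        sort_keys=True,
    ).encode()
    tag = hmac.new(VENDOR_KEY, payload, hashlib.sha256).hexdigest()
    return {"payload": payload.decode(), "signature": tag}


def verify_attestation(doc: dict, expected_measurement: str, min_version: int) -> bool:
    """Accept only a validly signed attestation for the expected code."""
    payload = doc["payload"].encode()
    expected_tag = hmac.new(VENDOR_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_tag, doc["signature"]):
        return False  # not issued by the (simulated) platform
    claims = json.loads(payload)
    if claims["measurement"] != expected_measurement:
        return False  # different code is running than the one we rebuilt
    return claims["platform_version"] >= min_version


doc = sign_attestation("abc123", platform_version=7)
print(verify_attestation(doc, expected_measurement="abc123", min_version=5))
```

The expected measurement comes from rebuilding the public source code, so the check ties the running program back to code that anyone can read.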

However, with a TEE it is always necessary to trust the vendor. A malicious TEE vendor can forge remote attestations directly. Therefore, if you consider the vendor a possible attack vector, you should avoid relying solely on TEEs and ideally combine them with ZK or consensus protocols.

The appeal of TEEs

In our view, TEEs are popular, and particularly well suited to deploying AI Agents, mainly for the following reasons:

Performance: A TEE can run LLM models with performance and cost overhead similar to those of an ordinary server. zkML, on the other hand, requires a lot of computing power to generate zk proofs for LLMs

GPU support: NVIDIA provides TEE computing support in its latest GPU series (Hopper, Blackwell, etc.)

Correctness: LLMs are non-deterministic; entering the same prompt multiple times can give different results. As a result, multiple nodes (including observers who try to create fraud proofs) may never reach consensus on the output of an LLM run. In this scenario, we can trust that an LLM running in a TEE cannot be manipulated by malicious actors and that the program in the TEE always runs as written, which makes TEEs better suited than opML or consensus for ensuring the reliability of LLM inference results

Confidentiality: The data in the TEE is not visible to external programs, so private keys generated or received in a TEE stay protected. This can be used to assure users that any message signed by such a key comes from a program inside the TEE. Users can entrust their private keys to the TEE, set signing conditions, and confirm that signatures from the TEE meet those pre-set conditions

Networking: With suitable tools, programs running in the TEE can securely access the Internet (without revealing queries or responses to the server hosting the TEE, while still giving third parties assurance that data was retrieved correctly). This is very useful for retrieving information from third-party APIs and can be used to outsource computation to trusted but proprietary model providers

Write permissions: In contrast to zk solutions, code running in the TEE can construct messages (whether tweets or transactions) and send them out through API and RPC network access

Developer-friendly: TEE-related frameworks and SDKs let people write code in any language and deploy programs to TEEs as easily as to cloud servers
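The signing conditions mentioned under Confidentiality can be illustrated with a small sketch. It assumes a program inside the TEE that generates its own key and refuses any request outside a fixed policy; `PolicySigner`, its parameters, and the message format are hypothetical, and HMAC stands in for a real signature scheme.

```python
import hashlib
import hmac
import secrets


class PolicySigner:
    """Holds a key that never leaves this object and signs only allowed requests."""

    def __init__(self, max_amount: int, allowed_recipients: set[str]):
        # Generated inside the (simulated) TEE and never exported.
        self._key = secrets.token_bytes(32)
        self.max_amount = max_amount
        self.allowed_recipients = frozenset(allowed_recipients)

    def sign_transfer(self, recipient: str, amount: int) -> str:
        """Sign a transfer only if it satisfies the pre-set conditions."""
        if recipient not in self.allowed_recipients:
            raise PermissionError("recipient not allowed by policy")
        if not 0 < amount <= self.max_amount:
            raise PermissionError("amount outside policy limit")
        message = f"{recipient}:{amount}".encode()
        # ASSUMPTION: HMAC stands in for an asymmetric signature.
        return hmac.new(self._key, message, hashlib.sha256).hexdigest()


signer = PolicySigner(max_amount=100, allowed_recipients={"alice"})
signature = signer.sign_transfer("alice", 50)
```

Because the policy is part of the measured code, a user who verifies the remote attestation also learns which conditions every signature from this key must have satisfied.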

For better or worse, quite a few TEE use cases currently have no practical alternative. We believe that TEEs further expand the design space for on-chain applications, which may drive the emergence of new application scenarios.

TEEs are not a silver bullet

Programs running in TEEs are still vulnerable to a range of attacks and bugs, just like smart contracts. For simplicity, we categorize the possible vulnerabilities as follows:

Developer negligence

Whether deliberately or by accident, developers can weaken the security guarantees of programs in TEEs through the code they write. This includes:

Opaque code: The security model of the TEE relies on external verifiability. Transparency of the code is critical for verification by external third parties.

Problems with code measurements: Even if the code is public, nothing is actually verified unless a third party rebuilds the code and checks the result against the code measurement in the remote attestation. Skipping this step is like receiving a zk proof but never verifying it.

Insecure code: Even if keys are generated and managed correctly in the TEE, the program logic may still leak them during external calls. The code may also contain backdoors or vulnerabilities. Compared with traditional backend development, this demands a higher standard of development and auditing, similar to smart contract development.

Supply Chain Attacks: Modern software development uses a lot of third-party code. Supply chain attacks pose a significant threat to the integrity of the TEE.

Runtime Vulnerabilities

Developers can fall victim to runtime vulnerabilities even when they are careful. Developers must carefully consider whether any of the following will affect the security assurance of their projects:

Dynamic Code: It may not always be possible to keep all code transparent. Sometimes the use case itself requires dynamically executing opaque code that is loaded into the TEE at runtime. Such code can easily leak secrets or break invariants, and great care must be taken to prevent this.

Dynamic Data: Most applications use external APIs and other data sources during execution. The security model then extends to these data sources, which play the same role as oracles in DeFi: incorrect or even outdated data can lead to disaster. For AI Agents, one example is over-reliance on LLM services such as Claude.

Insecure and unstable communication: A TEE has to run on a server that contains the TEE components. From a security perspective, the server hosting the TEE is a perfect man-in-the-middle (MitM) between the TEE and the outside world. The server can not only peek into the TEE's outbound connections and see what is being sent, but also censor specific IPs, restrict connections, and inject packets designed to trick one party into thinking they come from someone else.

For example, running a matching engine that can process encrypted transactions in a TEE cannot provide fair ordering guarantees (anti-MEV) because routers/gateways/hosts can still drop, delay, or prioritize packets based on the IP address they originated from.

Architectural flaws

The technology stack of a TEE application should be chosen carefully. When building TEE applications, the following issues may arise:

Applications with large attack surfaces: The attack surface of an application refers to the number of code modules that need to be fully secure. Code with a large attack surface is very difficult to audit and may hide bugs or exploitable vulnerabilities. Reducing it often conflicts with developer experience. For example, a TEE program that relies on Docker has a much larger attack surface than one that does not, and an enclave that relies on a full-featured operating system has a larger attack surface than one that uses the lightest possible operating system.

Portability and liveness: In Web3, applications must be censorship-resistant. Anyone should be able to start a TEE and take over for an inactive participant, which requires the application inside the TEE to be portable. The biggest challenge here is key portability. Some TEE systems have a built-in key derivation mechanism, but keys derived this way are tied to the machine: a TEE program on another server cannot derive the same keys. The program is then usually confined to a single machine, which is not enough to maintain portability.

Insecure root of trust: For example, when running an AI Agent in a TEE, how do you verify that a given address belongs to the Agent? Without careful design here, the true root of trust may be an external third party or key escrow platform rather than the TEE itself.

Operational Issues

Last but not least, there are also some practical considerations about how to actually run a server that executes TEE programs:

Insecure Platform Versions: TEE platforms occasionally receive security updates, which are reflected as platform versions in the remote attestation. If your TEE is not running on a secure platform version, attackers can exploit known attack vectors to steal keys from the TEE. Worse, a platform version that is secure today may become insecure tomorrow.

No physical security: Despite your best efforts, TEEs can be vulnerable to side-channel attacks, which typically require physical access and control of the server where the TEE resides. Physical security is therefore an important layer of defense in depth. A related concept is cloud attestation, where you can prove that the TEE is running in a cloud data center and that the cloud platform has physical security assurances.
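Because a platform version can go from secure to insecure overnight, the version check has to be repeated against an up-to-date policy rather than performed once at deployment. The sketch below is one hypothetical way to model this; `PlatformPolicy` and its integer version numbers are assumptions for illustration and do not correspond to any vendor's versioning scheme.

```python
from dataclasses import dataclass, field


@dataclass
class PlatformPolicy:
    """Tracks which attested platform versions are still considered safe."""

    minimum_version: int
    revoked: set[int] = field(default_factory=set)

    def revoke(self, version: int) -> None:
        """Mark a version as unsafe, e.g. after a security advisory."""
        self.revoked.add(version)

    def is_acceptable(self, attested_version: int) -> bool:
        """Check the version reported in a remote attestation."""
        return (
            attested_version >= self.minimum_version
            and attested_version not in self.revoked
        )


policy = PlatformPolicy(minimum_version=3)
accepted_before = policy.is_acceptable(4)  # version 4 is fine today
policy.revoke(4)                           # an advisory is published
accepted_after = policy.is_acceptable(4)   # the same attestation now fails
```

Verifiers that cache an old "accepted" result would miss the revocation, so the policy should be consulted every time an attestation is checked.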

Building a secure TEE application

We have divided our recommendations into the following points:

1. The safest approach: no external dependencies

Creating a highly secure application may involve eliminating external dependencies, such as external inputs, APIs, or services, thereby reducing the attack surface. This approach ensures that the application runs in an isolated manner, without external interactions that could compromise its integrity or security. While this strategy may limit the functional diversity of the application, it can provide extremely high security.

If the model runs locally, this level of security can be achieved for most Crypto x AI use cases.

2. Necessary Precautions to Take

Whether your application has external dependencies or not, the following are a must!

Treat TEE applications as smart contracts, not backend applications; keep updates infrequent and test rigorously.

Building TEE programs should be done with the same rigor as writing, testing, and updating smart contracts. Like smart contracts, TEEs operate in a highly sensitive and immutable environment where errors or unexpected behavior can lead to serious consequences, including complete loss of funds. Thorough audits, extensive testing, and minimal, carefully audited updates are critical to ensuring the integrity and reliability of TEE-based applications.

Audit the code and check the build pipeline

The security of an application depends not only on the code itself, but also on the tools used in the build process. A secure build pipeline is critical to preventing vulnerabilities. A TEE only guarantees that the provided code will run as expected; it cannot fix defects introduced during the build process.

To reduce risks, the code must be rigorously tested and audited to eliminate errors and prevent unnecessary information leakage. In addition, reproducible builds play a vital role, especially when the code is developed by one party and used by another. Reproducible builds allow anyone to verify that the program executed inside the TEE matches the original source code, ensuring transparency and trust.

Without reproducible builds, it is almost impossible to determine the exact content of the program executed inside the TEE, which compromises the security of the application.

For example, the source code of DeepWorm (a project that runs a worm brain simulation model in a TEE) is completely open, and the program executed within the TEE is built reproducibly using Nix pipelines.

Use audited or validated libraries

When handling sensitive data within a TEE program, use only audited libraries for key management and private data handling. Unaudited libraries can expose secrets and compromise the security of your application. Prioritize well-reviewed, security-focused dependencies to maintain data confidentiality and integrity.

Always verify attestations from the TEE

Users interacting with the TEE must verify the remote attestation (or equivalent verification mechanism) produced by the TEE to ensure secure and trusted interactions. Without these checks, the server may manipulate responses, making it impossible to distinguish authentic TEE output from tampered data. Remote attestation provides critical proof of the code base and configuration running in the TEE, and it lets us determine whether the program executed within the TEE is consistent with expectations.

Specific attestations can be verified on-chain (Intel SGX, AWS Nitro), off-chain using ZK proofs (Intel SGX, AWS Nitro), or by the users themselves or a managed service such as t16z or MarlinHub.

3. Use case-dependent recommendations

Depending on the target use case of your application and its structure, the following tips may help make your application more secure.

Ensure that user interactions with the TEE are always performed over a secure channel

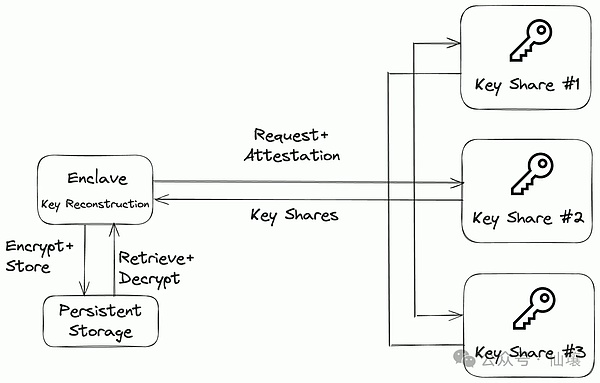

The server where the TEE resides is inherently untrusted: it can intercept and modify communications. In some cases it may be acceptable for the server to read the data but not change it, while in others even reading the data is undesirable. To mitigate these risks, it is critical to establish a secure, end-to-end encrypted channel between the user and the TEE. At a minimum, ensure that each message carries a signature so its authenticity and origin can be verified. In addition, users should always check the remote attestation provided by the TEE to verify that they are communicating with the correct TEE. This ensures the integrity and confidentiality of the communication.

For example, Oyster is able to support secure TLS issuance by using CAA records and RFC 8657. In addition, it provides a TEE-native TLS protocol called Scallop that does not rely on WebPKI.

Know that TEE memory is transient

TEE memory is transient, which means that when the TEE is shut down, its contents, including cryptographic keys, are lost. Without a secure mechanism to save this information, critical data may become permanently inaccessible, potentially putting funds or operations at risk.

Multi-party computation (MPC) networks combined with decentralized storage systems such as IPFS can be used to solve this problem. The MPC network splits the key across multiple nodes, ensuring that no single node holds the complete key while allowing the network to reconstruct the key when needed. Data encrypted with this key can be securely stored on IPFS.

If necessary, the MPC network can provide keys to new TEE servers running the same image, provided that specific conditions are met. This approach ensures resilience and strong security, keeping data accessible and confidential even in untrusted environments.
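The idea of splitting a key so that no single node holds it can be illustrated with Shamir secret sharing. This is a simplified sketch, not how any particular MPC network is implemented: production systems add authentication, check the conditions mentioned above before releasing shares, and often avoid reconstructing the key in one place. The function names and the 3-of-5 parameters are assumptions.

```python
import secrets

# 2**521 - 1 is a Mersenne prime, large enough to hold a 32-byte key.
PRIME = 2**521 - 1


def split_secret(secret: int, threshold: int, shares: int) -> list[tuple[int, int]]:
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    if not 1 <= threshold <= shares:
        raise ValueError("need 1 <= threshold <= shares")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_secret(points: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 to recover the secret."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = int.from_bytes(secrets.token_bytes(32), "big")
node_shares = split_secret(key, threshold=3, shares=5)  # one share per MPC node
recovered = recover_secret(node_shares[:3])             # any 3 nodes suffice
```

With a 3-of-5 split, the key survives the loss of two nodes, while any two colluding nodes learn nothing about it.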

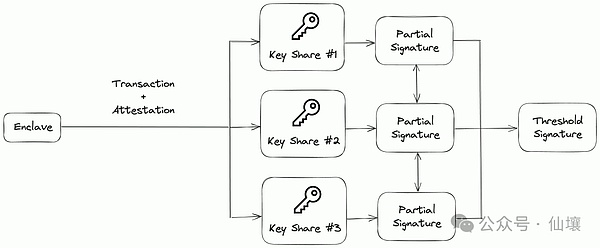

There is another solution, in which the TEE hands related transactions to different MPC servers separately, and the MPC servers sign them and aggregate the signatures and finally put the transactions on the chain. This approach is much less flexible and cannot be used to save API keys, passwords, or arbitrary data (without a trusted third-party storage service).

Reducing Attack Surface

For security-critical use cases, it is worthwhile to try to reduce as many peripheral dependencies as possible at the expense of developer experience. For example, Dstack ships with a minimal Yocto-based kernel that includes only the modules required for Dstack to work. It may even be worthwhile to use older technologies like SGX (over TDX) because that technology does not require the bootloader or operating system to be part of the TEE.

Physical Isolation

The security of the TEE can be further enhanced by physically isolating it from possible human intervention. Hosting the TEE server with a data center or cloud provider lets us rely on their physical security, but projects like Spacecoin are exploring a rather interesting alternative: space. The SpaceTEE paper relies on security measures, such as measuring the moment of inertia after launch, to verify that the satellite did not deviate from expectations on its way into orbit.

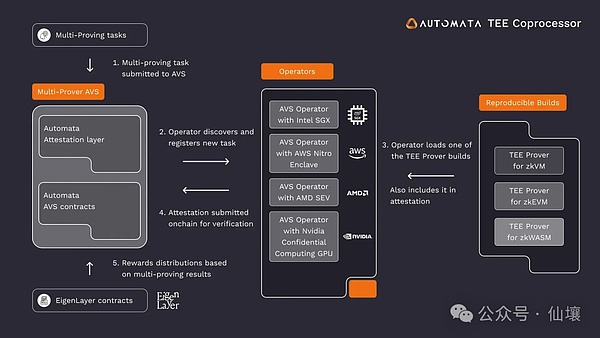

Multi-provers

Just as Ethereum relies on multiple client implementations to reduce the risk of bugs affecting the entire network, multi-provers use different TEE implementations to increase security and resilience. By running the same computation steps across multiple TEE platforms, multi-provers ensure that a vulnerability in one TEE implementation does not compromise the entire application. This approach requires the computation to be deterministic, or a consensus rule between the different TEE implementations in non-deterministic cases. In return, it provides significant advantages such as fault isolation, redundancy, and cross-validation, making it a good choice for applications that require reliability guarantees.
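The cross-validation step can be sketched as a simple quorum check over the outputs reported by each platform. This assumes a deterministic computation whose result is a byte string; `cross_validate`, the platform labels, and the quorum values are hypothetical, and each result is assumed to have already passed its own attestation check.

```python
from collections import Counter


def cross_validate(results: dict[str, bytes], quorum: int) -> bytes:
    """Return the output that at least `quorum` TEE platforms agree on."""
    if not results:
        raise ValueError("no results to compare")
    output, votes = Counter(results.values()).most_common(1)[0]
    if votes < quorum:
        raise RuntimeError(f"only {votes} platform(s) agree; need {quorum}")
    return output


# Hypothetical outputs of the same deterministic computation on three platforms.
honest = {"sgx": b"0xabc", "tdx": b"0xabc", "nitro": b"0xabc"}
one_compromised = {"sgx": b"0xabc", "tdx": b"0xabc", "nitro": b"0xdef"}

agreed = cross_validate(honest, quorum=3)
majority = cross_validate(one_compromised, quorum=2)
```

Requiring all platforms to agree gives the strongest safety guarantee but lets a single faulty platform halt the application; a lower quorum trades some of that safety for liveness.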

Looking to the future

TEEs have clearly become a very exciting area. As mentioned earlier, the ubiquity of AI and its continued access to user sensitive data means that large tech companies such as Apple and NVIDIA are using TEEs in their products and offering TEEs as part of their products.

On the other hand, the crypto community has always been very security-focused. As developers try to expand on-chain applications and use cases, we have seen TEEs become popular as a solution that provides the right tradeoff between functionality and trust assumptions.

While TEEs are not as trust-minimized as full ZK solutions, we expect TEEs to be the first path to slowly converge the products of Web3 companies and large tech companies.
