Comparing data redundancy on Arweave and IPFS

This article will explore the redundancy mechanisms of Arweave and IPFS, and which option is safer for your data.

JinseFinance

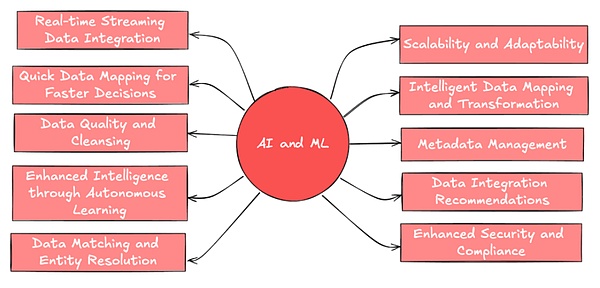

We have previously discussed how AI and Web3 can complement each other across verticals such as compute networks, agent platforms, and consumer applications. In the data-resources vertical, emerging Web3 projects open up new possibilities for acquiring, sharing, and using data.

Traditional data providers struggle to meet the demand from AI and other data-driven industries for high-quality, real-time, verifiable data, particularly with respect to transparency, user control, and privacy protection.

Web3 solutions aim to reshape the data ecosystem. Technologies such as multi-party computation (MPC), zero-knowledge proofs (ZKP), and TLS Notary help ensure the authenticity and privacy of data as it flows between multiple sources, while distributed storage and edge computing provide greater flexibility and efficiency for real-time data processing.

In particular, decentralized data networks, an emerging category of infrastructure, have produced several representative projects: OpenLayer (a modular authentic data layer), Grass (a decentralized crawler-node network that uses users' idle bandwidth), and Vana (a Layer 1 network for user data sovereignty). Each takes a different technical path to open up new prospects for AI training and applications.

Through crowdsourced capacity, trustless abstraction layers, and token-based incentives, decentralized data infrastructure can offer more private, secure, efficient, and economical solutions than Web2 hyperscalers, while giving users control over their data and related resources and building a more open, secure, and interoperable digital ecosystem.

Data has become a key driver of innovation and decision-making across industries. UBS predicts that the volume of global data will grow more than tenfold between 2020 and 2030, to 660 ZB. By 2025, the world is expected to generate 463 EB (exabytes; 1 EB = 1 billion GB) of data every day. The data-as-a-service (DaaS) market is also expanding rapidly: according to Grand View Research, the global DaaS market was valued at US$14.36 billion in 2023 and is expected to grow at a compound annual growth rate (CAGR) of 28.1% through 2030, reaching US$76.8 billion. Behind these high-growth figures is demand, across many industries, for high-quality, real-time, reliable data.

AI model training relies on large volumes of input data to identify patterns and adjust parameters, and after training, further datasets are needed to test a model's performance and generalization. AI agents, an emerging form of intelligent application, will also need real-time, reliable data sources to make accurate decisions and execute tasks.

(Source: Leewayhertz)

Demand for business analytics is also becoming more diverse and widespread, and analytics has become a core tool for driving corporate innovation. For example, social media platforms and market research firms need reliable user-behavior data to formulate strategies and spot trends, and they integrate multi-dimensional data from multiple social platforms to build more complete user profiles.

The Web3 ecosystem likewise needs reliable, real-world data on-chain to support new financial products. As more assets are tokenized, flexible and reliable data interfaces are needed for product innovation and risk management, so that smart contracts can execute based on verifiable real-time data.

Beyond these, there are scientific research, the Internet of Things (IoT), and more. These new use cases show a surge in demand across industries for diverse, authentic, real-time data, and traditional systems may struggle to keep up with the rapidly growing volume of data and changing requirements.

2. Limitations and problems of the traditional data ecosystem

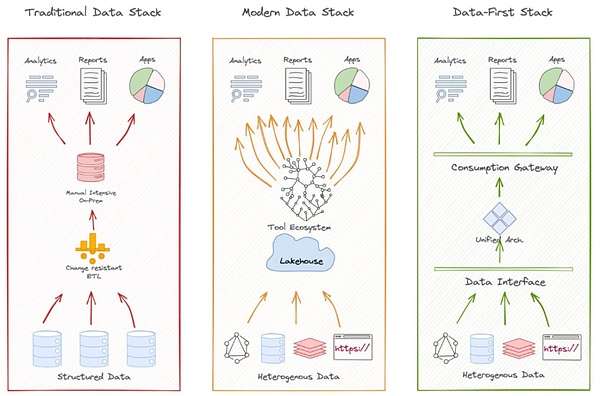

A typical data ecosystem includes data collection, storage, processing, analysis, and application. In the centralized model, data is collected and stored centrally, managed and maintained by the core enterprise IT team, and subject to strict access controls.

Google's data ecosystem, for example, spans data sources from its search engine and Gmail to the Android operating system. It collects user data through these platforms, stores it in globally distributed data centers, and processes and analyzes it algorithmically to develop and optimize its products and services.

In financial markets, the data and infrastructure provider LSEG (whose data business was formerly Refinitiv) obtains real-time and historical data from global exchanges, banks, and other major financial institutions, collects market-related news through Reuters News, and uses proprietary algorithms and models to generate analytics and risk assessments as additional products.

(Source: kdnuggets.com)

Traditional data architectures work well for professional services, but the limitations of the centralized model are becoming increasingly apparent. In particular, the traditional data ecosystem struggles with coverage of emerging data sources, transparency, and user privacy protection. Some examples:

Insufficient data coverage: Traditional data providers face challenges in quickly capturing and analyzing emerging data sources such as social media sentiment and IoT device data. Centralized systems have difficulty efficiently acquiring and integrating "long-tail" data from many small-scale or non-mainstream sources.

For example, the 2021 GameStop incident revealed the limitations of traditional financial data providers in analyzing social media sentiment. Investor sentiment on platforms such as Reddit quickly changed market trends, but data terminals such as Bloomberg and Reuters failed to capture these dynamics in a timely manner, resulting in lagging market forecasts.

Limited data accessibility: Market concentration limits access. Many traditional providers expose some data through APIs or cloud services, but high access fees and complex licensing processes still make data integration difficult.

On-chain developers, in particular, find it difficult to access reliable off-chain data quickly, because high-quality data is concentrated in the hands of a few giants and is costly to access.

Data transparency and credibility issues: Many centralized data providers lack transparency in their data collection and processing methods, and lack effective mechanisms to verify the authenticity and integrity of large-scale data. Verification of large-scale real-time data remains a complex issue, and the centralized nature also increases the risk of data tampering or manipulation.

Privacy protection and data ownership: Large technology companies have commercialized user data at scale, yet users, who create this private data, struggle to capture the value it generates. Users usually cannot see how their data is collected, processed, and used, and have little say over the scope and manner of its use. Excessive collection and use also create serious privacy risks.

For example, the Cambridge Analytica scandal involving Facebook exposed major gaps in data-use transparency and privacy protection at traditional data platforms.

Data silos: Real-time data from different sources and in different formats is hard to integrate quickly, which hampers comprehensive analysis. Much data is locked inside organizations, limiting sharing and innovation across industries and organizations; this silo effect hinders cross-domain data integration and analysis.

In the consumer sector, for example, brands need to combine data from e-commerce platforms, physical stores, social media, and market research, but this data can be hard to integrate because formats are inconsistent or platforms are isolated. Similarly, ride-hailing companies such as Uber and Lyft both collect large amounts of real-time data on traffic, passenger demand, and location, but competition keeps them from sharing it.

In addition, there are also issues such as cost efficiency and flexibility. Traditional data vendors are actively responding to these challenges, but the emerging Web3 technology provides new ideas and possibilities for solving these problems.

Since decentralized storage solutions such as IPFS (InterPlanetary File System) appeared in 2014, a series of projects has emerged to address the limitations of the traditional data ecosystem. Decentralized data solutions now form a multi-layered, interconnected ecosystem covering every stage of the data life cycle: generation, storage, exchange, processing and analysis, verification and security, and privacy and ownership.

Data Storage: The rapid growth of Filecoin and Arweave suggests that decentralized storage (DCS) is becoming a paradigm shift in the storage field. DCS solutions reduce single-point-of-failure risk through a distributed architecture and attract participants with more competitive cost-effectiveness. As large-scale applications have emerged, DCS storage capacity has grown explosively (for example, the total storage capacity of the Filecoin network reached 22 exabytes in 2024).

Processing and Analysis: Decentralized data computing platforms such as Fluence use edge computing to make data processing faster and more efficient, which suits latency-sensitive applications such as the Internet of Things (IoT) and AI inference. Web3 projects also use techniques such as federated learning, differential privacy, trusted execution environments, and fully homomorphic encryption to offer flexible privacy protection and trade-offs at the computing layer.

Data Market/Exchange Platform: To help quantify data value and let it circulate, Ocean Protocol has built an efficient, open data exchange channel through tokenization and DEX mechanisms; for example, it has helped traditional manufacturers (Daimler, the parent company of Mercedes-Benz) develop a data exchange market for sharing data in supply chain management. Streamr, on the other hand, has built a permissionless, subscription-based data streaming network for IoT and real-time analytics, and has shown strong potential in transportation and logistics projects (for example, its collaboration with a Finnish smart city project).

With the increasing frequency of data exchange and utilization, the authenticity, credibility and privacy protection of data have become key issues that cannot be ignored. This has prompted the Web3 ecosystem to extend innovation to the fields of data verification and privacy protection, giving rise to a series of groundbreaking solutions.

Many Web3 technologies and native projects are working on data authenticity and private-data protection. Beyond widely used techniques such as ZK proofs and MPC, Transport Layer Security Notary (TLS Notary) is an emerging verification method that deserves particular attention.

Introduction to TLS Notary

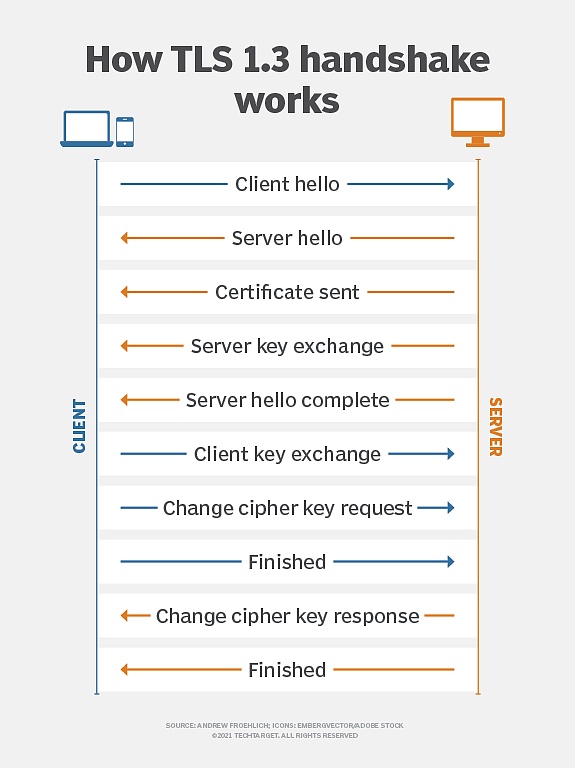

Transport Layer Security (TLS) is a widely used encryption protocol for network communications, designed to ensure the security, integrity and confidentiality of data transmission between clients and servers. It is a common encryption standard in modern network communications and is used in multiple scenarios such as HTTPS, email, and instant messaging.

(TLS Encryption Principle, Source: TechTarget)

When TLS Notary was created about ten years ago, its original goal was to verify the authenticity of TLS sessions by introducing a third-party "notary" alongside the client (the Prover) and the server.

Using key splitting, the master key of the TLS session is divided into two parts, held by the client and the notary respectively. This design lets the notary participate in verification as a trusted third party without being able to access the actual communication content. The notarization mechanism is designed to detect man-in-the-middle attacks, guard against fraudulent certificates, ensure that data has not been tampered with in transit, and allow a trusted third party to confirm the legitimacy of the communication while keeping its content private.
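The key-splitting idea can be illustrated with the simplest form of two-party secret sharing. This is a hedged illustration, not the TLS Notary protocol itself: it assumes a plain XOR split of a 32-byte key, whereas the real protocol derives and uses its key shares inside a multi-party computation so that neither side ever assembles the full key. The function names `split_key` and `combine` are hypothetical.

```python
import secrets


def split_key(key: bytes) -> tuple[bytes, bytes]:
    """Split a key into two XOR shares.

    Each share on its own is uniformly random, so neither the prover nor the
    notary learns anything about the key from its share alone.
    """
    notary_share = secrets.token_bytes(len(key))
    prover_share = bytes(k ^ n for k, n in zip(key, notary_share))
    return prover_share, notary_share


def combine(share_a: bytes, share_b: bytes) -> bytes:
    """Recover the key; this requires both shares (XOR is its own inverse)."""
    return bytes(a ^ b for a, b in zip(share_a, share_b))


# Illustrative 32-byte session key.
session_key = secrets.token_bytes(32)
prover_share, notary_share = split_key(session_key)
recovered = combine(prover_share, notary_share)
```

Because a single share is indistinguishable from random bytes, the notary can take part in the session without being able to decrypt it alone; in the real protocol the parties also avoid ever exchanging their shares outright.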

Thus, TLS Notary provides secure data verification and effectively balances verification requirements and privacy protection.

In 2022, the TLS Notary project was rebuilt by the Ethereum Foundation's Privacy and Scaling Explorations (PSE) research lab. The new protocol was rewritten from scratch in Rust and incorporates more advanced cryptographic protocols (such as MPC). It lets users prove to third parties that data they received from a server is authentic without revealing the data's content. While keeping the original core verification function, the new version greatly improves privacy protection, making it better suited to current and future data-privacy needs.
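One building block behind "prove authenticity without revealing content" is selective disclosure over commitments: the prover commits to each part of the transcript, the notary attests to the commitments, and the prover later opens only the parts it chooses. The sketch below is a simplified illustration under stated assumptions, not the TLSNotary protocol: it uses salted SHA-256 commitments and, because the Python standard library has no public-key signature scheme, an HMAC as a stand-in for the notary's signature (so here the verifier must share the notary's key). The transcript contents and function names are made up.

```python
import hashlib
import hmac
import secrets


def commit(chunk: bytes, salt: bytes) -> bytes:
    """Salted SHA-256 commitment: binds to a chunk without revealing it."""
    return hashlib.sha256(salt + chunk).digest()


# Hypothetical server response, split into chunks the prover may reveal separately.
transcript = [b"HTTP/1.1 200 OK", b"balance=1520", b"ssn=123-45-6789"]
salts = [secrets.token_bytes(16) for _ in transcript]
commitments = [commit(chunk, salt) for chunk, salt in zip(transcript, salts)]

# Stand-in for the notary's signature: an HMAC over all commitments.
# A real notary would use a public-key signature that anyone can verify.
NOTARY_KEY = secrets.token_bytes(32)


def attest(commitments: list[bytes]) -> bytes:
    return hmac.new(NOTARY_KEY, b"".join(commitments), hashlib.sha256).digest()


attestation = attest(commitments)


def verify_disclosure(index: int, chunk: bytes, salt: bytes,
                      commitments: list[bytes], attestation: bytes) -> bool:
    """Check the attestation, then check that the revealed chunk matches its commitment."""
    if not hmac.compare_digest(attest(commitments), attestation):
        return False
    return hmac.compare_digest(commit(chunk, salt), commitments[index])


# The prover reveals only the balance; the SSN chunk stays hidden.
ok = verify_disclosure(1, transcript[1], salts[1], commitments, attestation)
```

The salt matters: without it, a verifier could guess short hidden values (such as a balance) by hashing candidates and comparing them with the commitments.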

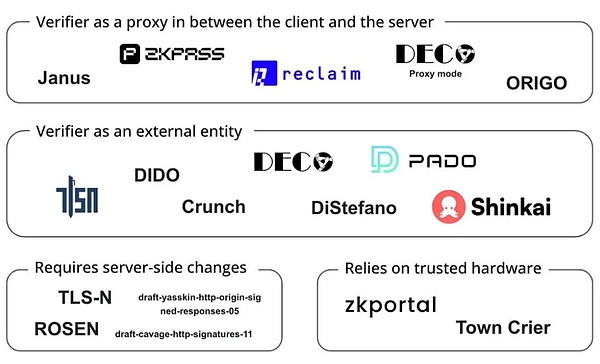

TLS Notary has continued to evolve in recent years, and several variants built on it further enhance privacy and verification capabilities:

zkTLS: A privacy-enhanced variant of TLS Notary that incorporates ZKP technology, letting users generate cryptographic proofs about web page data without exposing any sensitive information. It suits communication scenarios that demand very strong privacy protection.

3P-TLS (Three-Party TLS): Involves three parties (client, server, and auditor) and lets the auditor verify the security of the communication without learning its content. The protocol is useful where transparency and privacy are both required, such as compliance reviews or audits of financial transactions.

Web3 projects use these cryptographic techniques to strengthen data verification and privacy protection, break data monopolies, and address data silos and trusted transmission. They let users prove facts such as social media account ownership, shopping records for loan applications, bank credit history, professional background, and academic credentials without compromising privacy. Examples include:

Reclaim Protocol uses zkTLS technology to generate zero-knowledge proofs for HTTPS traffic, allowing users to securely import activity, reputation and identity data from external websites without exposing sensitive information.

zkPass uses 3P-TLS to let users verify real-world private data without leaking it. It is used in scenarios such as KYC and credit services and is compatible with HTTPS websites.

Opacity Network, built on zkTLS, lets users securely prove their activity on platforms such as Uber, Spotify, and Netflix without directly accessing those platforms' APIs, enabling cross-platform proof of activity.

(Projects working on TLS Oracles, Source: Bastian Wetzel)

As an important link in the data value chain, Web3 data verification has broad application prospects, and its growing ecosystem points toward a more open, dynamic, and user-centric digital economy. However, authenticity verification technology is only the starting point for building a new generation of data infrastructure.

Some projects combine these verification technologies to push further upstream in the data ecosystem, into data provenance, distributed data collection, and trusted transmission. The following sections focus on three representative projects, OpenLayer, Grass, and Vana, which show distinctive potential for building a new generation of data infrastructure.

OpenLayer is one of the projects in the a16z Crypto Startup Accelerator's Spring 2024 cohort. Positioning itself as the first modular authentic data layer, it aims to provide a modular solution for coordinating the collection, verification, and transformation of data to meet the needs of both Web2 and Web3 companies. OpenLayer has attracted backing from well-known funds and angel investors, including Geometry Ventures and LongHash Ventures.

Traditional data layers face multiple challenges: lack of trusted verification mechanisms, limited access due to reliance on centralized architectures, lack of interoperability and liquidity of data between different systems, and no fair data value distribution mechanism.

A more concrete problem is that AI training data is becoming increasingly scarce. On the public Internet, many websites have begun adopting anti-crawler restrictions to prevent AI companies from scraping data at scale.

Private, proprietary data is more complicated still. Much valuable data is stored in privacy-preserving ways because it is sensitive, and there are few effective incentives to share it. Since users currently have no safe way to benefit directly from providing private data, they are reluctant to share it.

To address these problems, OpenLayer combines data verification technology to build a Modular Authentic Data Layer that coordinates data collection, verification, and transformation through decentralization and economic incentives, giving Web2 and Web3 companies a more secure, efficient, and flexible data infrastructure.

OpenLayer provides a modular platform to simplify the data collection, trusted verification and conversion process:

a) OpenNodes

OpenNodes is the core component responsible for decentralized data collection in the OpenLayer ecosystem. It collects data through users' mobile applications, browser extensions and other channels. Different operators/nodes can optimize returns by performing the most suitable tasks based on their hardware specifications.

OpenNodes supports three main data types to meet the needs of different types of tasks:

Publicly available Internet data (such as financial data, weather data, sports data, and social media streams)

User private data (such as Netflix viewing history, Amazon order records, etc.)

Self-reported data from secure sources (such as data signed by a proprietary owner or verified by specific trusted hardware).

Developers can easily add new data types, specify new data sources, requirements, and data retrieval methods, and users can choose to provide de-identified data in exchange for rewards. This design allows the system to be continuously expanded to adapt to new data needs. The diverse data sources enable OpenLayer to provide comprehensive data support for various application scenarios and also lower the threshold for data provision.
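To illustrate the extensibility described above, here is a minimal sketch, assuming a hypothetical registry in which a developer describes a new data type by its source, category, and hardware requirements, and a node picks the highest-paying task its hardware can handle. `DataTypeSpec`, `NodeProfile`, `register`, and `best_task` are illustrative names, not OpenLayer APIs, and the reward figures are made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DataTypeSpec:
    """Hypothetical description a developer registers for a new data type."""
    name: str
    source: str                # where the data comes from (illustrative)
    category: str              # "public", "private", or "self-reported"
    min_bandwidth_mbps: float  # hardware requirements for the task
    min_ram_gb: float
    reward: float              # reward per completed task, arbitrary units


@dataclass(frozen=True)
class NodeProfile:
    """Hardware a node operator reports."""
    bandwidth_mbps: float
    ram_gb: float


REGISTRY: dict[str, DataTypeSpec] = {}


def register(spec: DataTypeSpec) -> None:
    """Adding a data type is just adding an entry; node code does not change."""
    REGISTRY[spec.name] = spec


def best_task(node: NodeProfile) -> DataTypeSpec | None:
    """Return the highest-reward task the node's hardware can handle, if any."""
    eligible = [
        spec for spec in REGISTRY.values()
        if node.bandwidth_mbps >= spec.min_bandwidth_mbps and node.ram_gb >= spec.min_ram_gb
    ]
    return max(eligible, key=lambda spec: spec.reward, default=None)


register(DataTypeSpec("weather", "public weather site", "public", 1, 0.5, 1.0))
register(DataTypeSpec("social_stream", "social media feed", "public", 50, 8, 5.0))

phone = NodeProfile(bandwidth_mbps=10, ram_gb=4)
desktop = NodeProfile(bandwidth_mbps=100, ram_gb=16)
```

With these made-up figures, the phone qualifies only for the weather task, while the desktop can take the better-paying social-stream task.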

b) OpenValidators

OpenValidators is responsible for data validation after collection, allowing data consumers to confirm that the data provided by the user fully matches the data source. All provided verification methods can be cryptographically proven, and the verification results can be verified afterwards. There are multiple different providers for the same type of proof. Developers can choose the most suitable verification provider according to their needs.

In the initial use case, especially for public or private data from Internet APIs, OpenLayer uses TLSNotary as a verification solution to export data from any web application and prove the authenticity of the data without compromising privacy.

Beyond TLSNotary, the modular design lets the verification system easily integrate other methods suited to different data types and verification requirements, including but not limited to:

Attested TLS connections: Use a Trusted Execution Environment (TEE) to establish an authenticated TLS connection, ensuring the integrity and authenticity of data in transit.

Secure Enclaves: Use hardware-level secure isolation environments (such as Intel SGX) to process and verify sensitive data, providing a higher level of data protection.

ZK Proof Generators: Integrate ZKPs so that data attributes or computation results can be verified without revealing the original data.
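The modular verification layer can be pictured as a registry keyed by proof type, in which several providers may serve the same type and the developer picks one. The sketch below assumes that layout. The names (`PROVIDERS`, `register_provider`, `verify`) are hypothetical, and `toy_digest_check` is a toy that only compares a SHA-256 digest; a real TLSNotary, TEE-attestation, or ZK verifier would sit behind the same function signature.

```python
from __future__ import annotations

import hashlib
from typing import Callable

# A verifier takes the raw payload plus a proof object and returns True or False.
Verifier = Callable[[bytes, dict], bool]

# proof type -> provider name -> verifier function
PROVIDERS: dict[str, dict[str, Verifier]] = {}


def register_provider(proof_type: str, provider: str, fn: Verifier) -> None:
    PROVIDERS.setdefault(proof_type, {})[provider] = fn


def verify(proof_type: str, provider: str, payload: bytes, proof: dict) -> bool:
    """Dispatch to whichever provider the developer selected for this proof type."""
    try:
        fn = PROVIDERS[proof_type][provider]
    except KeyError:
        raise ValueError(f"no provider {provider!r} for proof type {proof_type!r}") from None
    return fn(payload, proof)


def toy_digest_check(payload: bytes, proof: dict) -> bool:
    """Toy stand-in: accepts if the proof carries the payload's SHA-256 digest."""
    return hashlib.sha256(payload).hexdigest() == proof.get("sha256")


register_provider("tls_transcript", "toy-digest", toy_digest_check)
```

Keeping the dispatch separate from the checks means adding a new method only requires one more `register_provider` call, which mirrors the plug-in approach the text describes.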

c) OpenConnect

OpenConnect is the core module in the OpenLayer ecosystem responsible for data conversion and availability. It processes data from various sources and ensures interoperability between different systems to meet the needs of different applications. For example:

Convert data into the on-chain oracle format for direct use by smart contracts.

Convert unstructured raw data into structured data for preprocessing purposes such as AI training.

For data from users' private accounts, OpenConnect provides data masking (desensitization) to protect privacy, along with components that strengthen security during data sharing and reduce leakage and misuse. To meet the real-time data needs of applications such as AI and blockchain, OpenConnect supports efficient real-time data conversion.
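As a hedged sketch of two of the conversions described above, the code below turns a price string into a fixed-point integer that a smart contract could store (Solidity has no native floating-point type, so on-chain feeds typically publish scaled integers) and replaces a raw account ID with a keyed hash. The 8-decimal scale, the field names, and the salt are assumptions for illustration. Keyed pseudonymization is one desensitization technique among several and is not necessarily what OpenConnect uses.

```python
import hashlib
import hmac
from decimal import Decimal


def to_oracle_int(value: str, decimals: int = 8) -> int:
    """Scale a decimal string to a fixed-point integer for on-chain use.

    Decimal avoids binary floating-point error; digits beyond `decimals` are truncated.
    """
    return int(Decimal(value).scaleb(decimals))


def pseudonymize(user_id: str, secret_salt: bytes) -> str:
    """Replace a raw account ID with a keyed hash: records stay linkable but not reversible."""
    return hmac.new(secret_salt, user_id.encode(), hashlib.sha256).hexdigest()[:16]


SALT = b"hypothetical-per-deployment-secret"
raw = {"user_id": "alice@example.com", "pair": "ETH/USD", "price": "3521.47"}
converted = {
    "user": pseudonymize(raw["user_id"], SALT),
    "pair": raw["pair"],
    "answer": to_oracle_int(raw["price"]),
}
```

A keyed hash (HMAC) is used instead of a plain hash so that someone without the salt cannot recover short identifiers such as email addresses by hashing guesses.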

OpenLayer currently integrates with EigenLayer. OpenLayer AVS operators listen for data request tasks, fetch and verify the data, and report the results back to the system, backing their actions economically with assets staked or restaked through EigenLayer. If malicious behavior is confirmed, their staked assets can be slashed. As one of the earliest AVSs (Actively Validated Services) on EigenLayer mainnet, OpenLayer has attracted more than 50 operators and $4 billion in restaked assets.
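The operator flow described above, in which operators answer a data task, a result is accepted, and misbehavior costs stake, can be sketched as a stake-weighted vote. This is a hypothetical simplification, not EigenLayer's or OpenLayer's contract logic. It assumes a two-thirds quorum over responding stake, treats disagreement with an accepted answer as the confirmed misbehavior, and applies a fixed 10% slash. All names and parameters are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Operator:
    name: str
    stake: float  # restaked collateral backing this operator's responses


def settle_task(responses: dict[str, str], operators: dict[str, Operator],
                quorum: float = 2 / 3, slash_fraction: float = 0.10) -> str | None:
    """Accept the answer backed by at least `quorum` of the responding stake.

    Operators whose answer disagrees with an accepted result lose `slash_fraction`
    of their stake. If no answer reaches quorum, nothing is accepted or slashed.
    """
    if not responses:
        return None
    total_stake = sum(operators[name].stake for name in responses)
    backing: dict[str, float] = {}
    for name, answer in responses.items():
        backing[answer] = backing.get(answer, 0.0) + operators[name].stake

    winner, winner_stake = max(backing.items(), key=lambda item: item[1])
    if winner_stake < quorum * total_stake:
        return None

    for name, answer in responses.items():
        if answer != winner:
            operators[name].stake *= 1 - slash_fraction
    return winner


operators = {n: Operator(n, s) for n, s in [("a", 100.0), ("b", 100.0), ("c", 50.0)]}
result = settle_task({"a": "42", "b": "42", "c": "41"}, operators)
```

Here operators a and b back "42" with 200 of 250 staked units, which clears the quorum, so c's stake is cut from 50 to 45. Refusing to slash when no quorum forms keeps an honest minority from being punished in a split vote.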

Overall, OpenLayer's decentralized data layer expands the scope and diversity of available data without sacrificing practicality or efficiency, while using cryptography and economic incentives to ensure data authenticity and integrity. Its technology has broad practical use cases: Web3 dApps that need off-chain information, AI models that must be trained and run on real inputs, and companies that want to segment and target users based on existing identities and reputations. Users, in turn, can monetize their private data.

Grass is the flagship project of Wynd Network and aims to build a decentralized web crawler and AI training data platform. At the end of 2023, Grass closed a $3.5 million seed round led by Polychain Capital and Tribe Capital. In September 2024 it raised a Series A led by Hack VC, with participation from well-known investors such as Polychain, Delphi, Lattice, and Brevan Howard.

As noted above, AI training needs access to new data, and one way to get it is to spread requests across many IP addresses to get past access restrictions. Grass started from this idea and built a distributed network of crawler nodes that uses users' idle bandwidth, in a decentralized physical infrastructure (DePIN) manner, to collect verifiable datasets for AI training. Nodes route web requests through users' Internet connections, access public websites, and compile structured datasets, using edge computing for preliminary data cleaning and formatting to improve data quality.

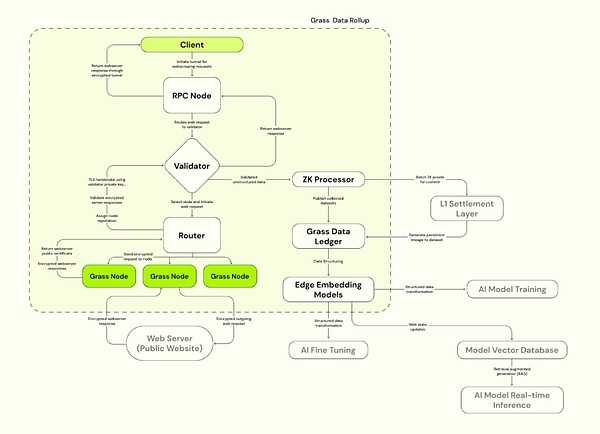

Grass uses a Layer 2 Data Rollup architecture built on Solana to improve processing efficiency. Validators receive, verify, and batch web transactions from nodes and generate ZK proofs to ensure data authenticity. The verified data is stored in the data ledger (L2) and linked to the corresponding proofs on the L1 chain.
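The source does not say exactly how Grass links ledger data to its L1 proofs. One common way to anchor a large off-chain dataset to a chain is a Merkle tree: only the 32-byte root goes on-chain, and any single record can later be proven to belong to the committed set. The sketch below assumes SHA-256 and the convention of duplicating the last node on odd-sized levels. It is a plain hash commitment, not a ZK proof, and not Grass's actual scheme; the record contents and function names are hypothetical.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    """Hash adjacent pairs; an odd last node is paired with itself."""
    if len(level) % 2:
        level = level + [level[-1]]
    return [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(records: list[bytes]) -> bytes:
    level = [sha256(r) for r in records]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(records: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True when the sibling is on the right."""
    level = [sha256(r) for r in records]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = _next_level(level)
        index //= 2
    return proof


def verify(record: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    node = sha256(record)
    for sibling, sibling_is_right in proof:
        node = sha256(node + sibling) if sibling_is_right else sha256(sibling + node)
    return node == root


# Hypothetical session records held in the L2 ledger; only `root` would be posted to L1.
records = [f"session-{i}".encode() for i in range(5)]
root = merkle_root(records)
proof_3 = merkle_proof(records, 3)
```

A proof contains about log2(n) hashes, so checking one record against the on-chain root stays cheap however large the ledger grows. Production Merkle schemes usually also domain-separate leaf and internal hashes (for example, with distinct prefix bytes), which this sketch omits for brevity.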

a) Grass nodes

As with OpenNodes, end users install and run the Grass app or browser extension, contributing idle bandwidth to web crawling. Nodes route web requests through the user's Internet connection, access public websites, compile structured datasets, and use edge computing for preliminary data cleaning and formatting. Users earn GRASS tokens based on the bandwidth and data volume they contribute.
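The preliminary cleaning and formatting step that nodes perform can be sketched with Python's standard-library `HTMLParser`. This is a hedged illustration of turning a raw page into a structured record. The class name, the choice of fields (title and paragraphs), and the rule of skipping `<script>`/`<style>` content are assumptions, not Grass's actual pipeline, which the source does not describe in detail.

```python
from __future__ import annotations

from html.parser import HTMLParser


class PageExtractor(HTMLParser):
    """Collect a page's <title> and paragraph text, skipping <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.paragraphs: list[str] = []
        self._in_title = False
        self._skip_depth = 0                 # > 0 while inside <script> or <style>
        self._para: list[str] | None = None  # text fragments of the open <p>, if any

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "title":
            self._in_title = True
        elif tag == "p":
            self._para = []

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "title":
            self._in_title = False
        elif tag == "p" and self._para is not None:
            # Join fragments split by inline tags, then collapse whitespace.
            text = " ".join("".join(self._para).split())
            if text:
                self.paragraphs.append(text)
            self._para = None

    def handle_data(self, data):
        if self._skip_depth:
            return
        if self._in_title:
            self.title += data.strip()
        elif self._para is not None:
            self._para.append(data)


def extract(html: str) -> dict:
    """Turn raw HTML into a small structured record."""
    parser = PageExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "paragraphs": parser.paragraphs}


page = ("<html><head><title> Weather </title><style>p{color:red}</style></head>"
        "<body><p>Sunny, <b>24</b> C</p><script>var x = 1;</script>"
        "<p>  Wind   5 km/h </p></body></html>")
record = extract(page)
```

Doing this at the node, before upload, means validators and downstream consumers receive compact records instead of full pages, which is the data-quality benefit the text attributes to edge processing.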

b) Routers

Connect Grass nodes to validators, manage the node network, and relay bandwidth. Router operators earn rewards in proportion to the total verified bandwidth relayed through them.
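The proportional rule for routers comes down to a single division. In this minimal sketch, the epoch reward pool, its size, and the bandwidth figures are hypothetical; only the proportionality comes from the description above. A production system would more likely work in integer token units and define where rounding remainders go.

```python
def split_rewards(pool: float, relayed_gb: dict[str, float]) -> dict[str, float]:
    """Divide an epoch's reward pool in proportion to verified bandwidth relayed."""
    total = sum(relayed_gb.values())
    if total == 0:
        return {router: 0.0 for router in relayed_gb}
    return {router: pool * gb / total for router, gb in relayed_gb.items()}


rewards = split_rewards(1_000.0, {"router-a": 300.0, "router-b": 100.0})
```

With these figures, router-a relayed three quarters of the verified bandwidth and so receives 750 of the 1,000-unit pool.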

c) Validators

Receive, verify and batch web transactions from routers, generate ZK proofs, use unique key sets to establish TLS connections, and select appropriate cipher suites for communication with target web servers. Grass currently uses centralized validators and plans to move to validator committees in the future.

d) ZK Processor

Receives the proofs that validators generate for each node's session data, batches the validity proofs for all web requests, and submits them to Layer 1 (Solana).

e) Grass Data Ledger (Grass L2)

Stores the complete dataset and links it to the corresponding proofs on the L1 chain (Solana).

f) Edge Embedding Model

Converts unstructured web data into structured data that can be used for AI training.

Source: Grass

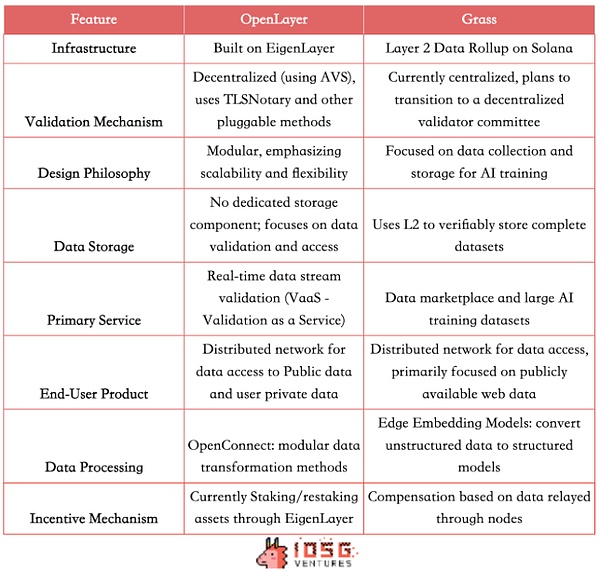

Both OpenLayer and Grass use distributed networks to provide companies with access to open Internet data and closed information that requires identity verification. The incentive mechanism promotes data sharing and the production of high-quality data. Both are committed to creating a decentralized data layer to solve the problem of data access and verification, but use slightly different technical paths and business models.

Differences in technical architecture

Grass uses a Layer 2 Data Rollup architecture on Solana and currently relies on a centralized verification mechanism with a single validator. OpenLayer, one of the first AVSs, is built on EigenLayer and uses economic incentives and slashing to implement decentralized verification. It also adopts a modular design that emphasizes the scalability and flexibility of its data verification services.

Product differences

Both offer similar consumer-facing (to-C) products that let users realize the value of their data by running nodes. For business (to-B) use cases, Grass offers an interesting data marketplace model and uses its L2 to store complete datasets verifiably, giving AI companies structured, high-quality, verifiable training sets. OpenLayer has no dedicated data storage component for now, but offers a broader real-time data stream verification service (VaaS). Besides supplying data for AI, it suits scenarios that require fast responses, such as acting as a price oracle for RWA, DeFi, and prediction market projects or providing real-time social data.

Grass's target customers are therefore currently AI companies and data scientists who need large-scale, structured training datasets, as well as research institutions and enterprises that need large volumes of web data. OpenLayer, for now, targets on-chain developers who need off-chain data sources, AI companies that need real-time, verifiable data streams, and Web2 companies pursuing innovative user acquisition strategies, such as verifying users' history with competing products.

Potential competition in the future

Given industry trends, however, the two projects' functions may well converge. Grass may soon offer real-time structured data, and OpenLayer, as a modular platform, may expand into dataset management with its own data ledger, so their competitive areas may gradually overlap.

Both projects may also move into data labeling, a key link in the chain. Grass may move faster here because of its large node network, reportedly more than 2.2 million active nodes. This gives Grass the potential to offer reinforcement learning from human feedback (RLHF) services, using large amounts of labeled data to optimize AI models.

OpenLayer, however, with its expertise in data verification and real-time processing and its focus on private data, may keep an edge in data quality and credibility. And as one of EigenLayer's AVSs, OpenLayer may develop its decentralized verification mechanisms further.

Although the two projects may compete in some areas, their respective unique advantages and technical routes may also lead them to occupy different niches in the data ecosystem.

(Source: IOSG, David)

4.3 Vana

Vana, a user-centric data pool network, is also committed to providing high-quality data for AI and related applications, but it takes a markedly different technical path and business model from OpenLayer and Grass. Vana completed a $5 million round led by Coinbase Ventures in September 2024, following an $18 million Series A led by Paradigm. Other well-known investors include Polychain and Casey Caruso.

Originally launched as a research project at MIT in 2018, Vana aims to become a Layer 1 blockchain designed specifically for private user data. Its innovations in data ownership and value distribution let users profit from AI models trained on their data. At its core, Vana enables private data to circulate and realize its value through trustless, private, and attributable Data Liquidity Pools and an innovative Proof of Contribution mechanism:

4.3.1. Data Liquidity Pool

Vana introduces the concept of the Data Liquidity Pool (DLP), a core component of the network: each DLP is an independent peer-to-peer network that aggregates a specific type of data asset. Users can upload their private data (such as shopping records, browsing habits, and social media activity) to a specific DLP and choose whether to authorize specific third parties to use it. Data is aggregated and managed in these pools and de-identified to protect user privacy, while remaining available for commercial applications such as AI model training or market research.

Users who submit data to a DLP receive that DLP's own token as a reward. The token represents the user's contribution to the pool and grants governance rights over the DLP and a share of future profits. Users not only share data but also earn ongoing income whenever the data is used later, with visible tracking of that usage. Unlike traditional one-time data sales, Vana lets data keep participating in the economic cycle.
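The token flow described above can be sketched as a small ledger: accepted contributions mint pool tokens, and every later payment for data access is split across holders in proportion to their balances. This is a hypothetical model, not Vana's contract code. The class name, the one-token-per-point minting rate, and the fee amounts are assumptions, and governance rights are not modeled.

```python
from collections import defaultdict


class DataLiquidityPool:
    """Toy DLP: mints pool tokens for contributions and shares access revenue pro rata."""

    def __init__(self, tokens_per_point: float = 1.0) -> None:
        self.tokens_per_point = tokens_per_point
        self.balances: dict[str, float] = defaultdict(float)
        self.earnings: dict[str, float] = defaultdict(float)

    def contribute(self, user: str, score: float) -> float:
        """Mint tokens proportional to a contribution score (e.g. from a PoC function)."""
        minted = score * self.tokens_per_point
        self.balances[user] += minted
        return minted

    def pay_for_access(self, fee: float) -> None:
        """Each time the data is used, split the fee across current token holders."""
        supply = sum(self.balances.values())
        if supply == 0:
            return
        for user, balance in self.balances.items():
            self.earnings[user] += fee * balance / supply


pool = DataLiquidityPool()
pool.contribute("alice", 30)
pool.contribute("bob", 10)
pool.pay_for_access(100.0)  # e.g. a team trains a model on the pool
pool.pay_for_access(20.0)   # a later query pays again
```

Alice holds three quarters of the supply, so she collects 75 from the first payment and 15 from the second. This recurring split, rather than a one-off sale, is the "continuous income" the text describes.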

4.3.2. Proof of Contribution Mechanism

Vana's other core innovation is the Proof of Contribution (PoC) mechanism, its key tool for ensuring data quality. Each DLP can define its own contribution-proof function suited to its data, verifying the data's authenticity and integrity and assessing how much it improves AI model performance. This quantifies and records each user's contribution so that rewards can be paid. Much as Proof of Work does in cryptocurrency, Proof of Contribution allocates rewards based on the quality, quantity, and frequency of the data a user contributes, and smart contracts execute automatically to ensure contributors receive rewards that match their contributions.
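Because each DLP defines its own contribution-proof function, a PoC rule can be treated as a pluggable scoring function. The sketch below assumes one such rule: it normalizes quality, quantity, and frequency to the range 0 to 1, combines them with weights chosen by the DLP, and gives zero to data that fails verification. The `Submission` fields, the weights, and the caps are hypothetical and do not describe Vana's actual PoC.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Submission:
    records: int           # quantity: number of records contributed
    valid_fraction: float  # quality: share of records that passed authenticity checks (0..1)
    days_active: int       # frequency: days in the period the user contributed


# Each DLP can plug in its own scoring rule; this signature is hypothetical.
ContributionFn = Callable[[Submission], float]


def make_weighted_score(w_quality: float, w_quantity: float, w_frequency: float,
                        max_records: int, period_days: int) -> ContributionFn:
    """Build a scoring function that normalizes each factor to 0..1 and weights it."""
    def score(sub: Submission) -> float:
        if sub.valid_fraction == 0:
            return 0.0  # data that fails verification earns nothing
        quantity = min(sub.records, max_records) / max_records
        frequency = min(sub.days_active, period_days) / period_days
        return (w_quality * sub.valid_fraction
                + w_quantity * quantity
                + w_frequency * frequency)
    return score


# A hypothetical shopping-data DLP that values quality most.
shopping_poc = make_weighted_score(0.6, 0.3, 0.1, max_records=1000, period_days=30)
```

With weights summing to 1, scores stay between 0 and 1, and the caps on records and active days stop a contributor from inflating a score through sheer volume of low-value data.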

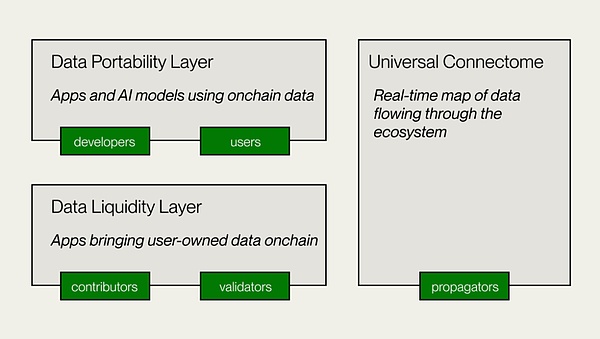

Data Liquidity Layer

This is Vana's core layer. It handles contributing, verifying and recording data into DLPs, and it brings data on-chain as a transferable digital asset. DLP creators deploy DLP smart contracts that set the purpose of data contributions, the verification method and the contribution parameters. Data contributors and custodians submit data for verification. The Proof of Contribution (PoC) module then validates the data, assesses its value, and grants governance rights and rewards according to those parameters.
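The contribution flow of this layer can be modeled in a few lines. In this hypothetical sketch, `DLPContract` stands in for a DLP smart contract. Its creator sets a purpose, a scoring function and two contribution parameters. Accepted data is recorded only by its SHA-256 digest, and that digest then serves as a transferable asset ID. Several details are assumptions made for illustration: recording hashes rather than raw data, the acceptance threshold and the reward formula.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class DLPContract:
    """In-memory stand-in for a DLP smart contract (hypothetical)."""
    purpose: str                       # what data this pool collects
    verify: Callable[[bytes], float]   # creator-defined scoring (PoC) function
    min_score: float                   # contribution parameter: acceptance threshold
    reward_per_point: int              # contribution parameter: tokens per score point
    owners: Dict[str, str] = field(default_factory=dict)   # data digest -> owner
    rewards: Dict[str, int] = field(default_factory=dict)  # contributor -> tokens

    def submit(self, contributor: str, data: bytes) -> Optional[str]:
        """Verify data, record its digest as an owned asset and pay a reward."""
        digest = hashlib.sha256(data).hexdigest()  # only the hash is recorded
        if digest in self.owners:
            return None  # identical data was already contributed
        score = self.verify(data)
        if score < self.min_score:
            return None  # rejected by the PoC check
        self.owners[digest] = contributor
        earned = int(score * self.reward_per_point)
        self.rewards[contributor] = self.rewards.get(contributor, 0) + earned
        return digest

    def transfer(self, digest: str, sender: str, recipient: str) -> None:
        """Move ownership of a recorded data asset to another account."""
        if self.owners.get(digest) != sender:
            raise PermissionError("sender does not own this data asset")
        self.owners[digest] = recipient


if __name__ == "__main__":
    # Hypothetical scoring rule: longer receipts score higher, capped at 1.0.
    pool = DLPContract("shopping receipts", lambda d: min(len(d) / 100, 1.0), 0.5, 10)
    asset = pool.submit("alice", b"x" * 100)
    print(asset is not None, pool.rewards)  # True {'alice': 10}
    pool.transfer(asset, "alice", "bob")
    print(pool.owners[asset])  # bob
```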

Data Portability Layer

This is Vana's application layer. It is an open data platform where data contributors and developers collaborate to build applications on the data liquidity accumulated in DLPs. It also provides infrastructure for distributed training of user-owned models and for AI dApp development.
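The article does not specify how user-owned models are trained. One common technique for training on data that stays with its owners is federated averaging (FedAvg). It is used here purely as an illustration and is not confirmed to be what Vana uses. In FedAvg, each participant trains locally and shares only parameter updates. The updates are then averaged in proportion to each participant's local dataset size. The function name and the plain-list representation of parameters are assumptions.

```python
from typing import List, Tuple


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """FedAvg-style aggregation of locally trained parameter vectors.

    Each update is (parameters, number_of_local_examples). Raw training data
    stays with each participant; only parameters reach the aggregator.
    """
    total = sum(n for _, n in updates)
    if not updates or total == 0:
        raise ValueError("need at least one update with training examples")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for params, n in updates:
        if len(params) != dim:
            raise ValueError("all parameter vectors must have the same length")
        for i, p in enumerate(params):
            averaged[i] += p * n / total  # weight by share of training examples
    return averaged


if __name__ == "__main__":
    # Two hypothetical users: one trained on 1 example, the other on 3.
    print(federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))  # [2.5, 3.5]
```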

Universal Connectome

A decentralized ledger and real-time data-flow graph that spans the Vana ecosystem and uses Proof of Stake consensus to record real-time data transactions. It ensures that DLP tokens are transferred correctly and gives applications cross-DLP data access. It is EVM-compatible, which enables interoperability with other networks, protocols and DeFi applications.
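Proof of Stake has to decide who records the next batch of transactions. As a rough illustration, the sketch below selects a block proposer with probability proportional to stake. It hashes a shared seed so that every node reaches the same result. This is a generic textbook scheme, not Vana's actual consensus. Production PoS chains add verifiable randomness, committees and slashing. `select_proposer` and the seed format are hypothetical.

```python
import hashlib
from collections import Counter
from typing import Dict


def select_proposer(stakes: Dict[str, int], seed: bytes) -> str:
    """Choose a proposer with probability proportional to stake.

    Hashing a shared seed (e.g. the previous block hash) gives every node the
    same pseudo-random point in [0, total_stake); validators occupy consecutive
    intervals of that range in sorted order, sized by their stake.
    """
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    point = int.from_bytes(hashlib.sha256(seed).digest(), "big") % total
    cumulative = 0
    for validator in sorted(stakes):
        cumulative += stakes[validator]
        if point < cumulative:
            return validator
    raise AssertionError("unreachable: point is always below total stake")


if __name__ == "__main__":
    stakes = {"validator-a": 70, "validator-b": 30}  # hypothetical stakes
    picks = Counter(select_proposer(stakes, n.to_bytes(8, "big")) for n in range(10_000))
    print(picks)  # roughly 70% validator-a, 30% validator-b
```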

(Source: Vana)

Vana takes a different path, focusing on making user data liquid and unlocking its value. This decentralized data-exchange model suits scenarios such as AI training and data marketplaces. It also offers a new approach to cross-platform interoperability and authorization of user data in the Web3 ecosystem. The ultimate goal is an open Internet ecosystem in which users own and manage their data and the smart products built from it.

5. The value proposition of decentralized data networks

In 2006, data scientist Clive Humby declared that data is the new oil. Over the past two decades, "refining" technologies such as big data analytics and machine learning have developed rapidly and released the value of data on an unprecedented scale. IDC forecasts that the global datasphere will grow to 163 ZB by 2025, most of it generated by individual users. As emerging technologies such as IoT, wearables, AI and personalized services spread, much of the data needed for commercial use will also come from individuals.

Web3 data solutions use distributed node networks to overcome the limitations of traditional infrastructure. This enables broader and more efficient data collection, faster real-time acquisition of specific data and more credible verification of it. Along the way, Web3 technologies help ensure data authenticity and integrity while protecting user privacy, which makes data use fairer. This decentralized data architecture democratizes data acquisition.

OpenLayer and Grass use a user-node model, and Vana lets users monetize their private data. In both cases, the projects make collecting specific data more efficient and also let ordinary users share in the dividends of the data economy. The result is a win-win model for users and developers, in which users genuinely control and benefit from their data and related resources.

Through token economics, Web3 data solutions redesign incentives and create a more equitable mechanism for distributing the value of data. This has attracted large numbers of users, hardware resources and capital, which in turn coordinate and optimize the operation of the entire data network.

Compared with traditional data solutions, these projects are also modular and scalable. For example, OpenLayer's modular design leaves room for future technical iteration and ecosystem expansion. Their technical characteristics also improve how training data for AI models is acquired, yielding richer and more diverse datasets.

Web3-driven solutions span the data lifecycle from generation, storage and verification to exchange and analysis. Their distinctive technical advantages address many shortcomings of traditional infrastructure. They also let users monetize their personal data, which fundamentally changes the model of the data economy. As the technology evolves and use cases expand, the decentralized data layer is expected to become critical next-generation infrastructure alongside other Web3 data solutions, supporting a wide range of data-driven industries.
